Get Started with OutSystems and Amazon Bedrock

How to get started building your next Generative AI application with OutSystems and Amazon Bedrock

In this guide, we will explore the essential steps to build your first (or next) Generative AI application with Amazon Bedrock in OutSystems. Specifically, you will learn how to set up Amazon Bedrock and your OutSystems application to use one or more foundation models for text-to-text and text-to-image generation.

Amazon Bedrock

Amazon Bedrock is a fully managed AWS service that gives you unified access to a selection of foundation models (FMs) from leading AI companies. At the time of writing, Bedrock features models from AI21 Labs, Anthropic, Cohere, Stability AI, and Amazon, and you can expect more models to be added over time.

Build Generative AI Applications with Foundation Models – Amazon Bedrock – AWS

Amazon Bedrock provides many additional, easy-to-use features. Privately customizing (fine-tuning) a foundation model with your own data without writing a single line of code is just one example. Find out more about Amazon Bedrock features, foundation models and use cases by following the link above.

*Note that at the time of writing, not all additional features are available yet.*

Preparations

Before we delve into the technical aspects, there are some preliminary tasks that need to be addressed.

AWS Bedrock Runtime Forge component and Demo application

Download and install my AWS Bedrock Runtime Integration component from OutSystems Forge.

AWS Bedrock Runtime - Overview | OutSystems

Make sure that you also download the attached Demo application, which we will use to walk through the technical implementation of the Integration component.

Identity and Access Management (IAM) User

To interact with an Amazon Bedrock Foundation Model we need an IAM user account with permission to invoke model APIs.

In the AWS console, switch to Identity and Access Management (IAM).

Create a new policy named BedrockInvokeModel with the following permissions:

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "VisualEditor0",
            "Effect": "Allow",
            "Action": "bedrock:InvokeModel",
            "Resource": "arn:aws:bedrock:*::foundation-model/*"
        }
    ]
}

This grants permission to invoke any Foundation Model in any region. In a production environment you may want to further restrict this to only specific models and regions.
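To restrict the policy, you can list explicit foundation model ARNs instead of the wildcards. The following Python sketch generates such a policy document. The model IDs (anthropic.claude-v2, amazon.titan-embed-text-v1) and the region are example assumptions; replace them with the models and region you actually use, then paste the printed JSON into the IAM policy editor.

```python
import json


def build_invoke_policy(model_ids, regions):
    """Return an IAM policy document that allows bedrock:InvokeModel
    only for the given foundation models in the given regions."""
    resources = [
        # Foundation model ARNs have an empty account field ("::").
        f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
        for region in regions
        for model_id in model_ids
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "bedrock:InvokeModel",
                "Resource": resources,
            }
        ],
    }


# Example values only: pick the models and region you requested access to.
policy = build_invoke_policy(
    ["anthropic.claude-v2", "amazon.titan-embed-text-v1"],
    ["us-east-1"],
)
print(json.dumps(policy, indent=2))
```

Generating the document keeps the ARN format consistent when the list of allowed models grows.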

Create a new user os-bedrock (programmatic access only) and attach the BedrockInvokeModel policy directly to the user.

Next, create an access key for the os-bedrock user. Make sure to copy the Access Key ID and the Secret Access Key before you proceed; we will need both shortly.

Amazon Bedrock

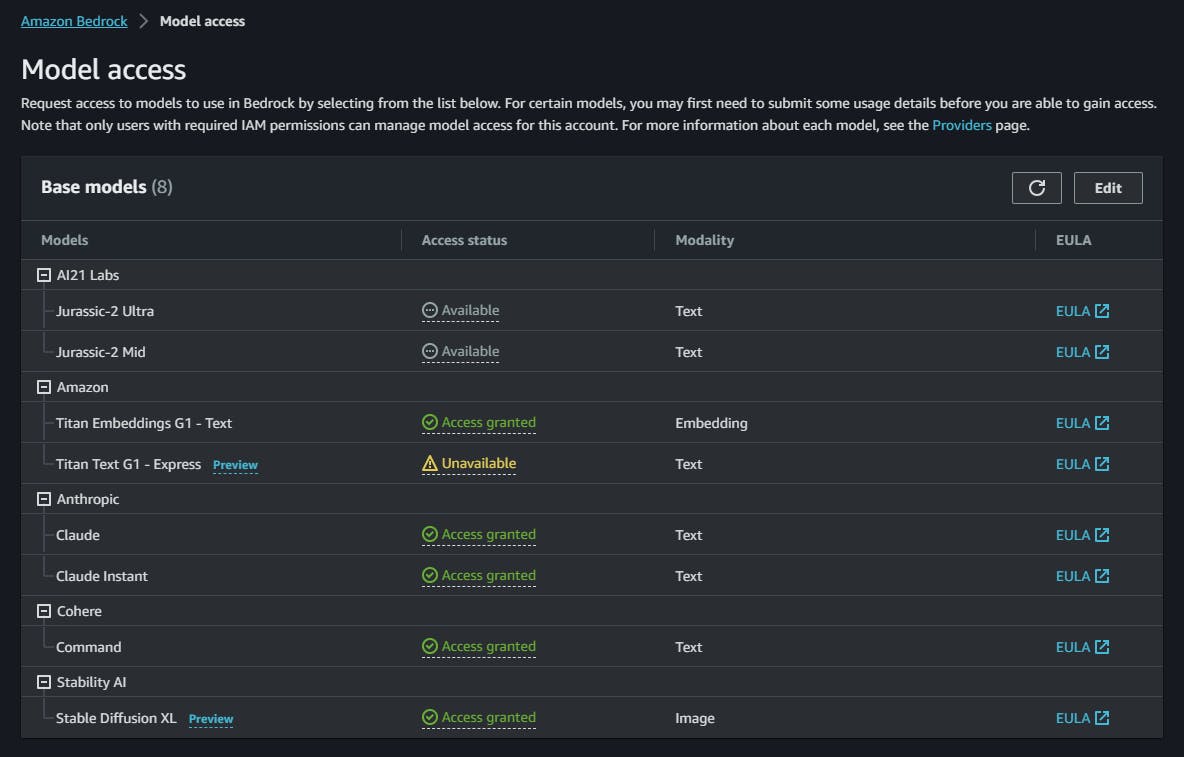

Before you can use a foundation model in Amazon Bedrock, you need to explicitly request access to it.

- In the AWS console, switch to Amazon Bedrock. New Amazon Bedrock features are made available in the US East (N. Virginia) region (us-east-1) first.
- In the left menu, select Model Access.
- Click Edit and select all foundation models you want to request access to. To try out all examples from the demo application, you need to request access to all available models.

Note that it can take up to 24 hours until access to a specific model is granted.

Demo Application

Make sure that you have downloaded the Demo application that is attached to the AWS Bedrock Runtime Forge component.

Open Service Center and, under Factory > Modules, open the AWSBedrockDemoApplication module.

Switch to the Site Properties tab and fill in the values for AWSAccessKey, AWSSecretAccessKey and AWSRegion.

Make sure to use the system name of the region where you provisioned the foundation models (e.g. us-east-1 for US East N. Virginia).

As usual, a security reminder: secrets shouldn't be stored in site properties but in a secure credentials management solution such as AWS Secrets Manager or HashiCorp Vault.

Try the Demo

With all preparation steps completed, you should now be able to try out the demo application.

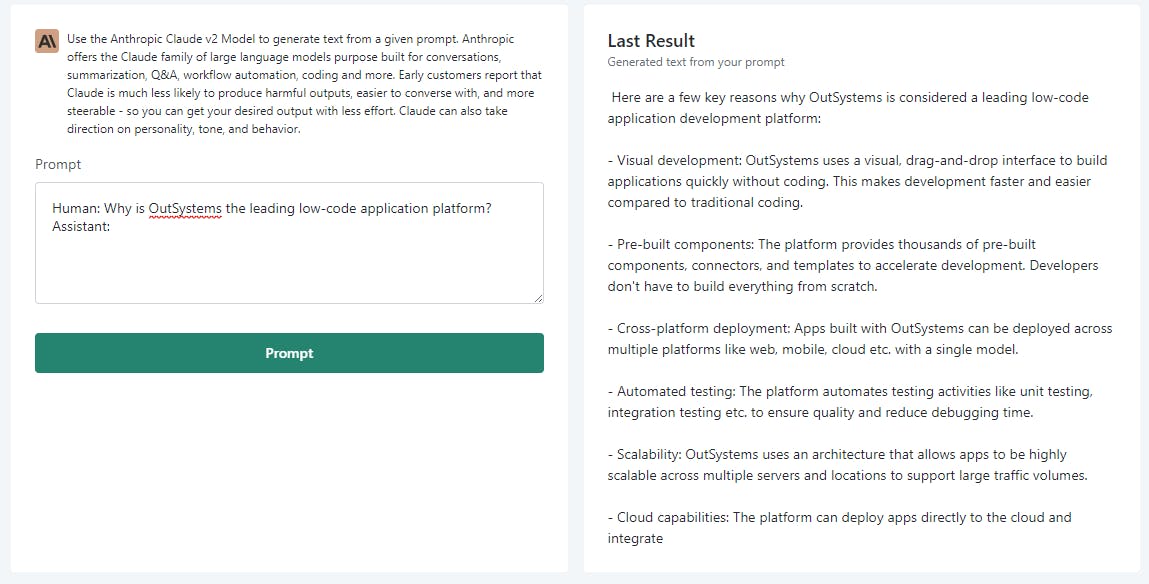

Start by asking Anthropic Claude version 2 something (Screen: Text). For example, enter the following prompt, including the line break before "Assistant:":

H: Why is OutSystems the leading Low-Code application platform on the market?

A:

Make sure to read the Prompt Design section of the Anthropic Claude documentation to learn how to construct prompts.

Introduction to Prompt Design (anthropic.com)

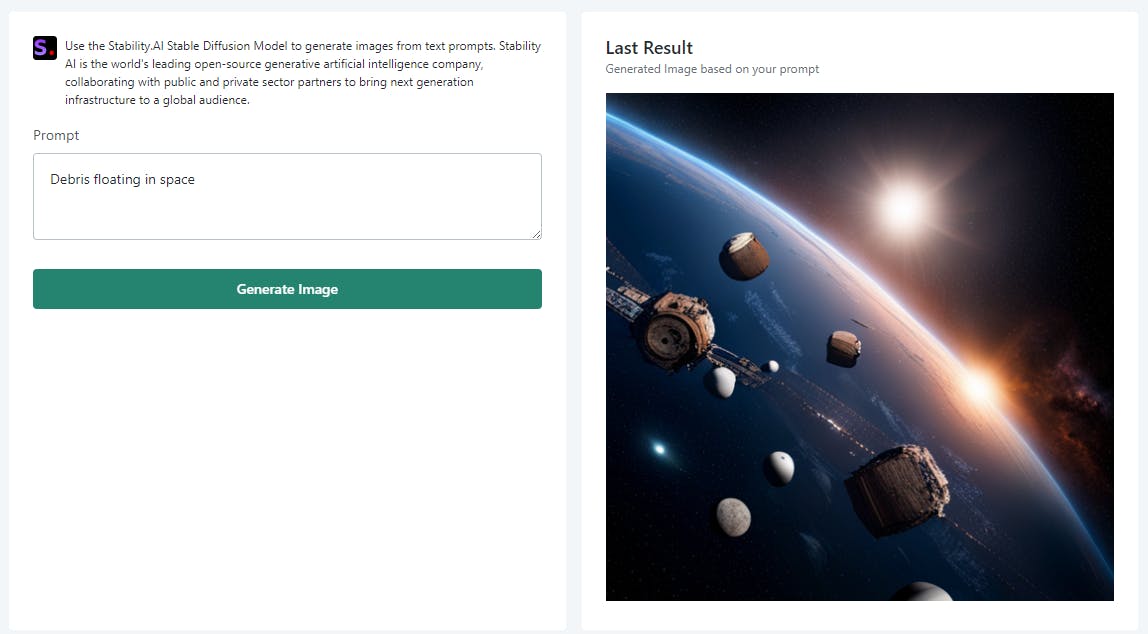
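For reference, the following Python sketch shows what a Claude v2 request body looks like at the Bedrock API level, using the Text Completions parameters (prompt, max_tokens_to_sample, temperature, stop_sequences) listed in the Bedrock inference parameter documentation. The values 300 and 0.5 are illustrative assumptions, and it is not confirmed that the Forge component's Request structure uses exactly these names.

```python
import json

HUMAN = "\n\nHuman:"
ASSISTANT = "\n\nAssistant:"


def build_claude_v2_body(question, max_tokens=300, temperature=0.5):
    # Claude v2 expects alternating Human/Assistant turns, each preceded by
    # a blank line, ending with an open Assistant turn for the model to fill.
    prompt = f"{HUMAN} {question}{ASSISTANT}"
    return {
        "prompt": prompt,
        "max_tokens_to_sample": max_tokens,
        "temperature": temperature,
        # Stop if the model starts writing the next Human turn itself.
        "stop_sequences": [HUMAN],
    }


def extract_completion(response_body):
    # Claude v2 responses carry the generated text in "completion".
    return json.loads(response_body)["completion"].strip()


body = build_claude_v2_body(
    "Why is OutSystems the leading Low-Code application platform on the market?"
)
print(json.dumps(body, indent=2))
```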

Next, generate an image from text with the Stability AI Stable Diffusion XL model (Screen: Image). I used the prompt “Debris floating in space”.

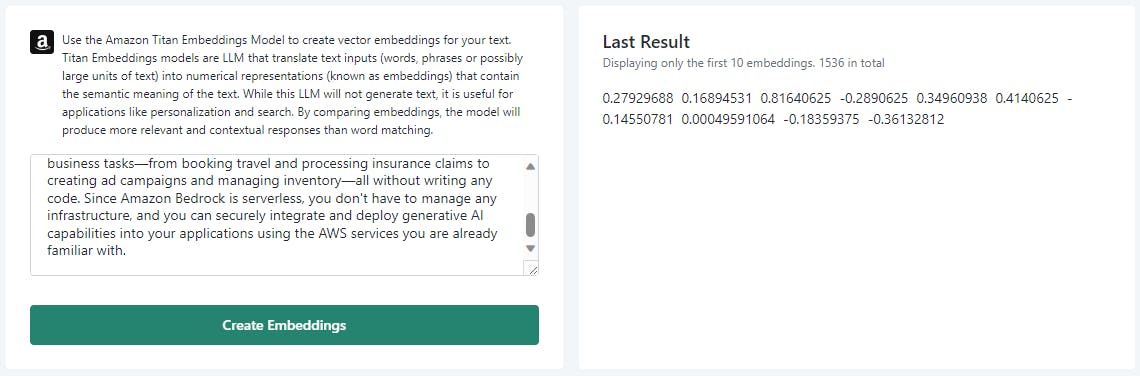
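Behind the Image screen, Stable Diffusion XL returns the generated image as a base64-encoded string rather than as a file. The following Python sketch shows the request body (text_prompts, cfg_scale, steps, seed) and the decoding step. The default values are illustrative assumptions, and the usage part runs on a fake response instead of calling Bedrock.

```python
import base64
import json


def build_sdxl_body(prompt, cfg_scale=10, steps=50, seed=0):
    # Stable Diffusion XL on Bedrock takes a list of text prompts.
    return {
        "text_prompts": [{"text": prompt}],
        "cfg_scale": cfg_scale,  # how strictly the image follows the prompt
        "steps": steps,          # number of diffusion steps
        "seed": seed,            # fixed seed for reproducible output
    }


def extract_image_bytes(response_body):
    # The response lists generated images under "artifacts", base64-encoded.
    payload = json.loads(response_body)
    return base64.b64decode(payload["artifacts"][0]["base64"])


# Usage with a fake response body (not a real image) to show the decoding step.
fake_response = json.dumps(
    {"artifacts": [{"base64": base64.b64encode(b"PNGDATA").decode("ascii"),
                    "finishReason": "SUCCESS"}]}
)
image_bytes = extract_image_bytes(fake_response)
```

With a real response, the decoded bytes are the binary image content, which you could, for example, save as a .png file.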

Lastly, turn text into a 1536-dimensional vector embedding with Amazon's Titan Embeddings G1 model (Screen: Embedding). I used the following text:

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon via a single API, along with a broad set of capabilities you need to build generative AI applications, simplifying development while maintaining privacy and security. With Amazon Bedrock’s comprehensive capabilities, you can easily experiment with a variety of top FMs, privately customize them with your data using techniques such as fine-tuning and retrieval augmented generation (RAG), and create managed agents that execute complex business tasks—from booking travel and processing insurance claims to creating ad campaigns and managing inventory—all without writing any code. Since Amazon Bedrock is serverless, you don't have to manage any infrastructure, and you can securely integrate and deploy generative AI capabilities into your applications using the AWS services you are already familiar with.

The demo outputs only the first ten dimensions; Titan Embeddings G1 always generates vector embeddings with a fixed length of 1536 dimensions.
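To illustrate what the Embedding screen does with the model output, here is a Python sketch that builds a Titan Embeddings G1 - Text request body (inputText) and parses the response (embedding), checking the fixed length of 1536 and returning the first ten values as the demo does. The usage part runs on a fake response, and the function names are hypothetical.

```python
import json

TITAN_EMBEDDING_DIMENSIONS = 1536  # fixed output length of Titan Embeddings G1


def build_titan_embedding_body(text):
    # Titan Embeddings G1 - Text takes the raw text as "inputText".
    return {"inputText": text}


def parse_titan_embedding(response_body, preview=10):
    # The response holds the vector under "embedding".
    embedding = json.loads(response_body)["embedding"]
    if len(embedding) != TITAN_EMBEDDING_DIMENSIONS:
        raise ValueError(
            f"expected {TITAN_EMBEDDING_DIMENSIONS} dimensions, got {len(embedding)}"
        )
    # Return the full vector plus its first few values, like the demo screen.
    return embedding, embedding[:preview]


# Fake response body for illustration; a real one comes from Bedrock.
fake_response = json.dumps(
    {"embedding": [i / 1536 for i in range(1536)], "inputTextTokenCount": 42}
)
vector, first_ten = parse_titan_embedding(fake_response)
```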

Vector embeddings are essential for implementing semantic search and Retrieval Augmented Generation (RAG). You can read more about both topics in my articles.
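A common way to compare embeddings is cosine similarity: texts with similar meaning produce vectors that point in similar directions. The following pure-Python sketch ranks stored documents against a query vector. Semantic search would return the top matches, and RAG would pass them to a text model as context. It uses toy 3-dimensional vectors for readability; in practice you would store the 1536-dimensional Titan vectors in a vector database rather than compare them in a loop.

```python
import math


def cosine_similarity(a, b):
    # Cosine of the angle between two vectors: 1.0 means same direction.
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vector, documents):
    # documents: list of (doc_id, vector); returns doc_ids, most similar first.
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vector, doc[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked]


# Toy 3-dimensional vectors; real Titan embeddings have 1536 dimensions.
docs = [("a", [0.0, 1.0, 0.0]), ("b", [1.0, 0.1, 0.0]), ("c", [0.5, 0.5, 0.0])]
ranking = rank_by_similarity([1.0, 0.0, 0.0], docs)
```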

Technical Walkthrough

One of the major advantages of Amazon Bedrock is that it unifies access to multiple foundation models. If you know how to interact with one model, you know how to interact with all of them, apart from some model-specific parameters.

The AWS Bedrock Runtime Forge component wraps Bedrock's unified model invocation API, making it very simple to use a foundation model.
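To make "unified" concrete: every Bedrock InvokeModel call has the same envelope (model ID, content type, accept header, JSON body), and only the body differs per provider. The following Python sketch builds that envelope; its keys mirror the parameter names of boto3's bedrock-runtime invoke_model call. How the Forge component builds its request internally is an assumption, not confirmed by this article.

```python
import json


def build_invoke_request(model_id, body):
    # Same envelope for every provider; only the JSON body is model-specific.
    return {
        "modelId": model_id,
        "contentType": "application/json",
        "accept": "application/json",
        "body": json.dumps(body),
    }


# Two different providers, one request shape.
claude = build_invoke_request(
    "anthropic.claude-v2",
    {"prompt": "\n\nHuman: Hello\n\nAssistant:", "max_tokens_to_sample": 200},
)
titan = build_invoke_request("amazon.titan-embed-text-v1", {"inputText": "Hello"})
```

With boto3 installed and credentials configured, such a dict could be passed as `boto3.client("bedrock-runtime").invoke_model(**claude)`, and the response body read with `json.loads(response["body"].read())`.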

Open the Demo application in Service Studio.

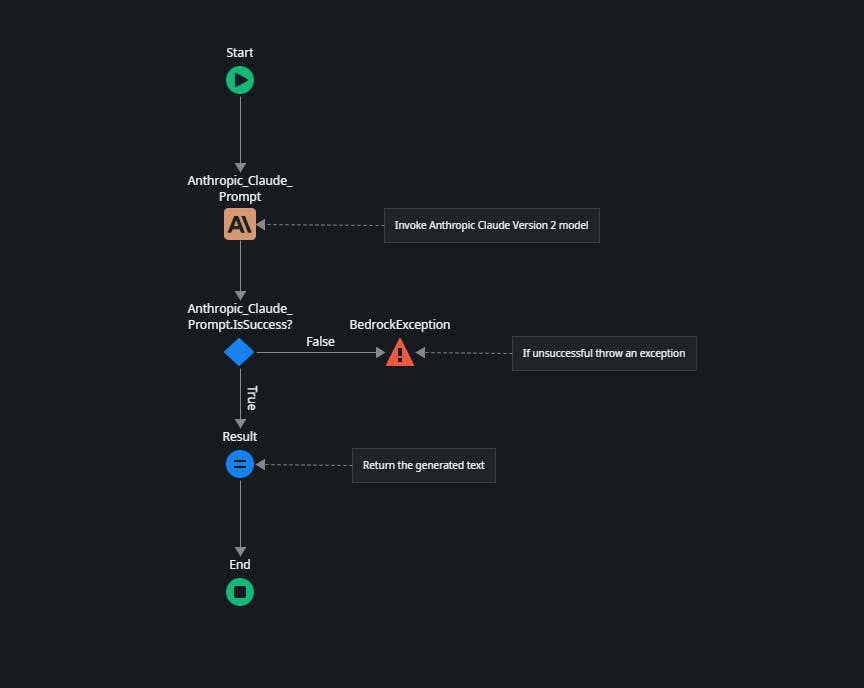

In the Logic tab, under Server Actions, open AnthropicClaudePrompt.

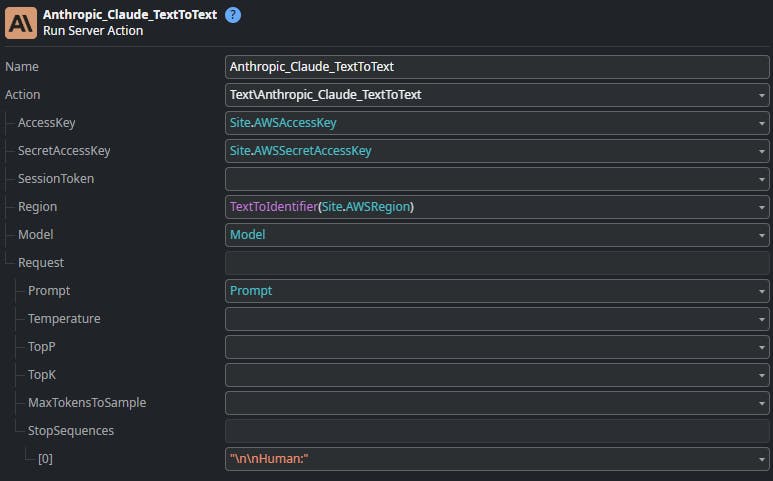

The AnthropicClaudePrompt server action wraps the Anthropic_Claude_TextToText server action from the AWS Bedrock Runtime component (AWS_BedrockRuntime_IS module).

We use this one as an example because all other server actions in AWS_BedrockRuntime_IS follow the same approach.

The wrapper server action takes a single input parameter, Prompt, which is the text you entered on the default screen of the demo application, and outputs a Result, which is the generated text displayed next to the text area.

The wrapper first executes the Anthropic_Claude_TextToText server action from the AWS_BedrockRuntime_IS module, which in turn invokes the corresponding Bedrock API.
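The wrapper pattern itself (Prompt in, Result out, with the component action doing the API call) can be sketched in Python as follows. `text_to_text` is a hypothetical stand-in for the Anthropic_Claude_TextToText action, injected as a function so the sketch runs without AWS; the request field names follow Claude v2 on Bedrock, and the token limit is an illustrative assumption.

```python
from typing import Callable, Dict

# Hypothetical stand-in for Anthropic_Claude_TextToText: receives a request
# structure and returns the model's response structure.
TextToTextAction = Callable[[Dict], Dict]


def anthropic_claude_prompt(prompt: str, text_to_text: TextToTextAction) -> str:
    # Mirrors the AnthropicClaudePrompt wrapper: Prompt in, Result out.
    request = {
        "prompt": prompt,  # passed through as typed, including Human/Assistant turns
        "max_tokens_to_sample": 300,  # illustrative value
    }
    response = text_to_text(request)
    return response["completion"].strip()


# Usage with a fake action in place of the real Bedrock call.
def fake_action(request):
    return {"completion": " Hello from Claude.", "stop_reason": "stop_sequence"}


result = anthropic_claude_prompt("\n\nHuman: Hi\n\nAssistant:", fake_action)
```

Injecting the action keeps the wrapper testable; in OutSystems the equivalent is simply the server action call in the wrapper's flow.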

All model operations of the AWS Bedrock Runtime Forge component need your AWS credentials to authorize your request. You can choose between:

- IAM credentials: the AccessKey and SecretAccessKey of an IAM user account with programmatic access.
- STS credentials: temporary credentials (AccessKey, SecretAccessKey and SessionToken) obtained by assuming an IAM role.

STS credentials are a more advanced topic but are strongly recommended. You can use my AWS Security Token Service Forge component to assume a role and retrieve temporary credentials.

AWS Security Token Service - Overview | OutSystems
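The only structural difference between the two credential types is the session token. The following Python sketch models both in one hypothetical AwsCredentials type. It reads temporary credentials from an STS AssumeRole response (the Credentials element with AccessKeyId, SecretAccessKey and SessionToken) and maps them to the keyword names boto3 clients accept. The example key values are placeholders.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AwsCredentials:
    access_key: str
    secret_access_key: str
    session_token: Optional[str] = None  # only present for STS credentials

    @property
    def is_temporary(self) -> bool:
        return self.session_token is not None

    @classmethod
    def from_assume_role(cls, response: dict) -> "AwsCredentials":
        # STS AssumeRole returns the temporary keys under "Credentials".
        creds = response["Credentials"]
        return cls(creds["AccessKeyId"], creds["SecretAccessKey"], creds["SessionToken"])

    def as_boto3_kwargs(self) -> dict:
        # Keyword names accepted by boto3.client(...) and boto3.Session(...).
        kwargs = {
            "aws_access_key_id": self.access_key,
            "aws_secret_access_key": self.secret_access_key,
        }
        if self.session_token is not None:
            kwargs["aws_session_token"] = self.session_token
        return kwargs


# Placeholder values, not real keys.
iam = AwsCredentials("AKIAEXAMPLE", "example-secret")
sts = AwsCredentials.from_assume_role(
    {"Credentials": {"AccessKeyId": "ASIAEXAMPLE", "SecretAccessKey": "example-secret",
                     "SessionToken": "example-token"}}
)
```

Keeping the token optional lets the same code path serve both long-lived IAM keys and temporary STS credentials.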

In addition, you must specify the Region where you provisioned Foundation Models. The AWS Bedrock Runtime Forge component contains a static entity to select the region.

The Model input parameter specifies which Claude version to use. For Anthropic Claude models, you can specify one of the following values:

- claude-v2:1
- claude-v2
- claude-v1
- claude-instant-v1

All model-specific parameters can be found in the Request structure. Parameters differ from provider to provider and from model to model; you will find information about each parameter in the Amazon Bedrock documentation.

Inference parameters for foundation models - Amazon Bedrock
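As an example of how parameters differ, the maximum output length has a different name for each text provider. The following Python sketch maps a common max_tokens and temperature onto each provider's request body, using the parameter names from the Bedrock inference parameter documentation for Claude v2/Instant, Jurassic-2, Titan Text, Cohere Command and Llama 2. The function and the provider keys are hypothetical. Claude 3 uses the separate Messages API format and is deliberately not covered.

```python
def build_text_body(provider, prompt, max_tokens=256, temperature=0.5):
    # Map common settings onto each provider's own parameter names.
    if provider == "anthropic":  # Claude v2 / Instant (Text Completions)
        return {"prompt": prompt, "max_tokens_to_sample": max_tokens,
                "temperature": temperature}
    if provider == "ai21":  # Jurassic-2
        return {"prompt": prompt, "maxTokens": max_tokens,
                "temperature": temperature}
    if provider == "amazon":  # Titan Text: settings are nested
        return {"inputText": prompt,
                "textGenerationConfig": {"maxTokenCount": max_tokens,
                                         "temperature": temperature}}
    if provider == "cohere":  # Command
        return {"prompt": prompt, "max_tokens": max_tokens,
                "temperature": temperature}
    if provider == "meta":  # Llama 2 chat
        return {"prompt": prompt, "max_gen_len": max_tokens,
                "temperature": temperature}
    raise ValueError(f"unsupported provider: {provider}")


titan_body = build_text_body("amazon", "Summarize Amazon Bedrock.")
llama_body = build_text_body("meta", "Summarize Amazon Bedrock.", max_tokens=100)
```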

The rest of the wrapper server action should be self-explanatory.

Next, open the PromptOnClick client action of the Demo screen that calls the wrapper.

The form value of the text area is passed as an input parameter and the result of the server action is then assigned to the Result attribute of the form structure.

Model Values

Some of the server actions accept a specific model version as an input parameter. Here is the complete list of possible values:

Anthropic_Claude2_TextToText
- claude-v2:1
- claude-v2
- claude-v1
- claude-instant-v1

Anthropic_Claude3_TextToText
- claude-3-haiku-20240307-v1:0
- claude-3-sonnet-20240229-v1:0

AI21_Jurassic_TextToText
- j2-ultra-v1
- j2-mid-v1

Amazon_Titan_TextToText
- titan-text-lite-v1
- titan-text-express-v1

Cohere_Command_TextToText
- command-text-v14
- command-light-text-v14

Meta_Llama_TextToText
- llama2-13b-chat-v1
- llama2-70b-chat-v1

Stability_StableDiffusion_TextToImage
- stable-diffusion-xl-v0
- stability.stable-diffusion-xl-v1

Cohere_Command_TextToEmbeddings
- embed-english-v3
- embed-multilingual-v3
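Bedrock itself identifies models as "<provider>.<model>", for example anthropic.claude-v2:1 or meta.llama2-13b-chat-v1. The following Python sketch shows how the short values above could map to full Bedrock model IDs. The mapping table and function are hypothetical; whether the Forge component adds the provider prefix this way is an assumption. Values that already carry the prefix, such as stability.stable-diffusion-xl-v1, are kept unchanged.

```python
# Hypothetical mapping from server action to Bedrock provider prefix.
PROVIDER_BY_ACTION = {
    "Anthropic_Claude2_TextToText": "anthropic",
    "Anthropic_Claude3_TextToText": "anthropic",
    "AI21_Jurassic_TextToText": "ai21",
    "Amazon_Titan_TextToText": "amazon",
    "Cohere_Command_TextToText": "cohere",
    "Meta_Llama_TextToText": "meta",
    "Stability_StableDiffusion_TextToImage": "stability",
    "Cohere_Command_TextToEmbeddings": "cohere",
}


def to_bedrock_model_id(action, value):
    # Prefix the short model value with its provider unless already prefixed.
    prefix = PROVIDER_BY_ACTION[action] + "."
    return value if value.startswith(prefix) else prefix + value


claude_id = to_bedrock_model_id("Anthropic_Claude2_TextToText", "claude-v2:1")
sdxl_id = to_bedrock_model_id(
    "Stability_StableDiffusion_TextToImage", "stability.stable-diffusion-xl-v1"
)
```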

Summary

Amazon Bedrock, together with the AWS Bedrock Runtime Forge component, provides easy access to multiple foundation models. With only a few preparation steps, you are up and running in minutes to build your own Generative AI application.

You can expect both the Integration Forge component and the Demo application to evolve over time.

Thank you for reading. I hope you liked it and that I have explained the important parts well. Let me know if not 😊

If you have difficulty getting up and running, please use the OutSystems Forum to get help. Suggestions on how to improve this article are very welcome: send me a message via my OutSystems profile or write a comment.